Ubicept Showcases Physics-Based Imaging at CES 2026 to Advance Next-Generation Robotic Perception

20 January 2026 | Interaction | By editor@rbnpress.com

In an interview with Robotics Business News, CEO Sebastian Bauer explains how rethinking image capture at the sensor level can deliver more reliable vision for robotics, autonomous systems, and industrial applications.

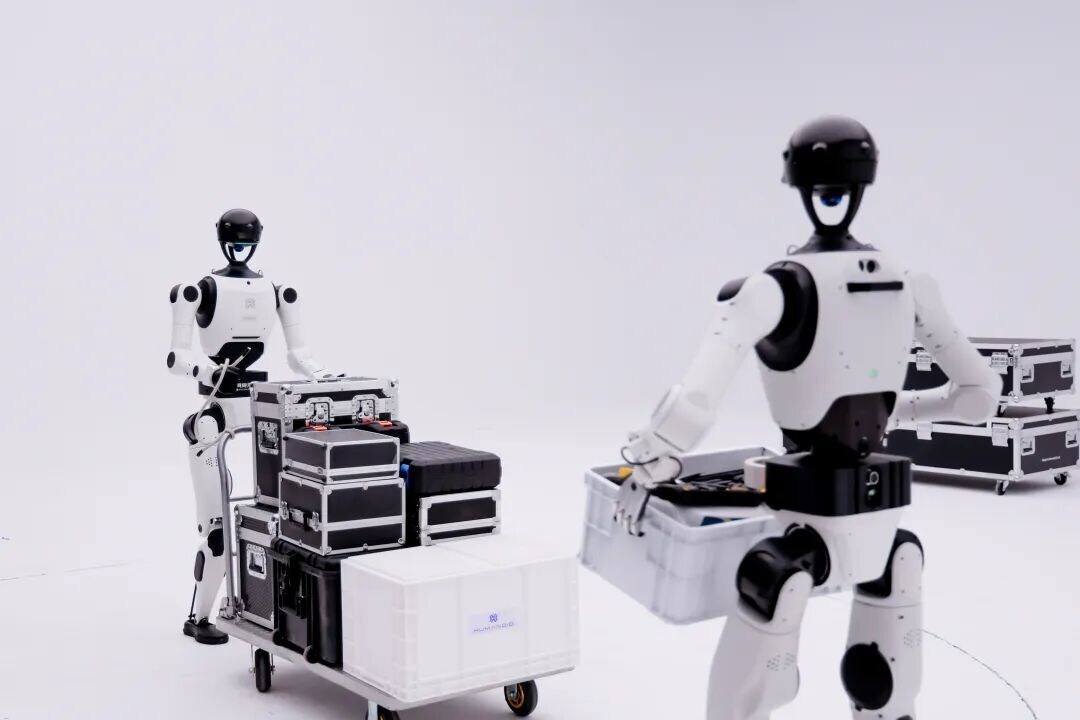

At CES 2026, Ubicept demonstrated how physics-based imaging can redefine perception performance for robotics and autonomous systems. In an interview with Robotics Business News, CEO and co-founder Sebastian Bauer explained how the company’s approach addresses a growing imbalance between rapidly advancing AI models and the slower evolution of sensing technology. By improving how visual data is captured and processed at the physical level, Ubicept aims to deliver more reliable, efficient, and scalable perception systems for robotics, automotive, and industrial applications.

What motivated Ubicept to showcase your physics-based imaging toolkit at CES 2026, and what did you hope that debut would achieve?

Today, there’s a growing imbalance in perception systems. While the “brains” of these systems – AI models, planning stacks, and decision logic – are advancing extremely quickly, the “eyes” of these systems – the sensing and image formation side of the stack – aren’t advancing at the same pace.

Robotics, automotive, and industrial systems are asking increasingly sophisticated models to interpret data that’s fundamentally limited by how it was captured. As a result, teams spend enormous effort making models robust to poor inputs instead of addressing the inputs provided by the sensors themselves. That approach works up to a point, but it becomes fragile as systems are pushed into more difficult environments.

Ubicept exists to help rebalance that equation. We focus on enabling better eyes by operating right up to the limits of what physics allows and by preserving as much information as possible, as early as possible, in the pipeline. CES 2026 was one of the few venues where we could show that idea to sensor makers, system integrators, and application developers all at once.

We want to change how people think about perception performance. When teams designing perception systems prioritize better inputs, the intelligence downstream is built on more reliable data instead of constantly compensating for sensor limitations. That leads to perception stacks that behave more predictably in real-world conditions and are easier to deploy. That shift, along with the fact that Ubicept is uniquely positioned to support it, was the core message we wanted to send at CES 2026.

Our presence at CES 2026 also helped us gain visibility as we work toward our eventual goal of having end-to-end computer vision pipelines. Such a pipeline would run from the capture of individual photons all the way to the inference made by the “brain,” which is the most compute- and power-efficient way to do optical perception.

How does the Ubicept Toolkit’s physics-based approach improve perception performance compared to conventional vision processing methods?

Many conventional vision pipelines are still optimized primarily for visual appearance rather than perception fidelity. Some denoising is applied, but in practice it’s often limited because high-quality denoising is compute-intensive and difficult to run consistently in real time. As a result, downstream perception models are frequently expected to infer what matters from inputs that have already lost information due to averaging, clipping, or conservative processing choices.

More recently, there’s been a growing wave of AI-based image and video denoising approaches. While these can produce visually impressive results, they often ignore the underlying photon statistics of the sensor, require retraining for each sensor type and sometimes even individual sensor noise characteristics, and can introduce hallucinations that are problematic for perception systems.

What developers tend to notice first with our physics-based approach isn’t just cleaner images; it’s more predictable behavior downstream. Depth estimation becomes more stable. Tracking degrades more gracefully in low-light conditions. Temporal consistency improves, which ends up mattering a lot for motion, control, and safety.

Importantly, these gains don’t require retraining perception models or redesigning existing stacks. The improvement comes from delivering data that’s closer to physical reality. In that sense, physics-based imaging doesn’t compete with AI. It lets AI operate closer to its potential.

Can you explain how Ubicept Photon Fusion (UPF) and FLARE firmware work together to enhance image quality in real-world conditions?

UPF is the core reconstruction engine. It fuses information across time using a physics-based model of photon arrival, sensor noise, and temporal behavior. That allows the system to recover detail in shadows, manage extreme contrast, and remain stable during motion.
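To make the idea of fusing frames over time with a photon-arrival model more concrete, here is a minimal sketch for a single pixel. It is not Ubicept's UPF algorithm, whose details are not described in the interview. It assumes a textbook model: photons arrive as a Poisson process, and each frame of a single-photon sensor records only whether at least one photon arrived. The function names and the flux value are hypothetical and chosen for illustration only.

```python
import math
import random


def simulate_binary_frames(flux, num_frames, rng):
    """Simulate one pixel of an idealized single-photon sensor (assumed model).

    Each frame records 1 if at least one photon arrived, else 0.
    With Poisson arrivals at a mean of `flux` photons per frame,
    P(at least one photon) = 1 - exp(-flux).
    """
    p_detect = 1.0 - math.exp(-flux)
    return [1 if rng.random() < p_detect else 0 for _ in range(num_frames)]


def estimate_flux(frames):
    """Maximum-likelihood flux estimate from a stack of binary frames.

    Inverts the detection probability: flux = -ln(1 - mean(frames)).
    """
    mean = sum(frames) / len(frames)
    # Clamp so the logarithm stays finite when every frame saw a photon.
    mean = min(mean, 1.0 - 1.0 / (2 * len(frames)))
    return -math.log(1.0 - mean)


rng = random.Random(0)      # fixed seed so the sketch is repeatable
true_flux = 0.5             # hypothetical photons per frame
frames = simulate_binary_frames(true_flux, 20000, rng)

naive_average = sum(frames) / len(frames)   # plain temporal averaging
estimate = estimate_flux(frames)            # physics-aware estimate
```

Under this assumed model, plain averaging of the binary frames underestimates the true flux because a frame cannot count more than one photon, while inverting the Poisson detection probability corrects for that saturation. A production system would also have to account for motion, dark counts, and other sensor noise, all of which this sketch leaves out.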

FLARE, which stands for Flexible Light Acquisition and Representation Engine, is what makes that reconstruction practical on real hardware. It manages timing, data flow, and compute constraints so UPF can run efficiently on embedded platforms. Without that firmware layer, you end up with algorithms that look promising offline but are hard to integrate into production systems.

Where this really shows up is in live environments. Lighting changes quickly. Motion isn’t predictable. Latency budgets are tight. In those conditions, the system isn’t guessing or hallucinating content. It’s reconstructing what the sensor is actually capable of capturing when time and physics are treated as first-class inputs.

That distinction is crucial for perception systems where confidence and consistency matter more than visual polish.

What key challenges in robotics and autonomous vehicle perception does your technology aim to address?

The most common challenge we see is brittleness. Many perception systems perform well in controlled conditions and then degrade sharply as soon as the environment becomes more demanding. Night scenes, extreme contrast, rapid lighting transitions, and fast motion tend to expose weaknesses in the sensing pipeline very quickly.

Those are also the situations where perception confidence matters most. When input data becomes unreliable, downstream systems are forced to extrapolate or fail silently. That uncertainty doesn’t stay localized; it propagates into planning and control.

Ubicept’s technology targets these failure modes by improving signal quality at the source. By increasing effective dynamic range and temporal resolution before perception models ever see the data, we reduce the number of cases where models are operating outside their comfort zone.

There’s also a practical systems benefit that often gets overlooked: better input data lets teams simplify downstream processing. That reduces compute requirements, lowers power consumption, and makes integration easier, which is essential in robotics and automotive platforms.

How do you see the integration of Ubicept’s toolkit with both CMOS and SPAD sensors accelerating advancements across industries?

Most industries aren’t going to transition from CMOS to SPAD sensors overnight. CMOS sensors are mature, widely deployed, and deeply integrated into existing products. SPAD sensors introduce new capabilities, but they also bring new data characteristics and system challenges.

We designed the Ubicept Toolkit to span that transition rather than force a choice. With CMOS sensors, we extract more usable information from the hardware that companies already deploy today. That delivers immediate value and lowers the barrier to adoption.

With SPAD sensors, the opportunity is even larger. Single-photon sensitivity and precise timing open up new perception capabilities, for example by eliminating the need for extra sensors such as LiDAR, but only if the data can be reconstructed efficiently and accurately. Physics-based imaging is a natural fit for that regime.

By supporting both sensor types within a single framework, developers can build hybrid systems, experiment safely, and evolve their products over time. That continuity is critical if new sensor technologies are going to move from research into production.

In what ways does partnering with camera manufacturers and developers, such as the Canon SPAD demo, validate or expand your vision for smart perception?

Technology partnerships are central to how we think about advancing perception, because no single company builds the entire sensing stack alone. Perception performance emerges from sensors, firmware, and algorithms working together, so close collaboration across those layers is essential.

The real value of these collaborations shows up in validation. Working with different sensor partners lets us test our approach across a range of hardware designs, operating conditions, and application requirements, rather than optimizing for a single device or use case.

Together, these partnerships reinforce our view that smart perception emerges from coordination across the ecosystem. When sensor developers, camera manufacturers, and processing technology providers work closely together, perception systems become both more capable and more practical to deploy.

What feedback have you received from early adopters or developers about the impact of physics-based imaging on their perception systems?

One of the most consistent pieces of feedback is improved system stability. Developers report fewer perception dropouts and more consistent outputs across challenging conditions. That tends to matter far more than incremental improvements in visual quality.

Another thing we hear often is about efficiency. With better input data, teams find they can simplify parts of their pipeline. They rely less on heavy post-processing and defensive modeling techniques, which speeds up development and makes deployment easier on constrained hardware.

What’s been especially interesting is how it changes how teams think about the problem. Instead of asking how to compensate for sensor limitations downstream, they start asking how to preserve information upstream. That shift in mindset is a strong signal that physics-based imaging is addressing a real gap.

Looking ahead, what are Ubicept’s next milestones for commercial deployment, ecosystem partnerships, and scaling beyond CES 2026 demonstrations?

CES 2026 was a starting point, not an endpoint. The next phase is about translating what we showed into real deployments. That includes expanding commercial pilots, deepening integrations with sensor partners, and moving from evaluation environments into production systems. A few years ago, we anticipated that SPAD sensors would begin replacing CMOS sensors. What we’re seeing across the market today provides clear evidence that this shift is well underway.

We’re also focused on ecosystem partnerships. Perception doesn’t exist in isolation, so close collaboration with platform providers, perception stack developers, and system integrators is critical.

Longer term, our goal is for physics-based imaging to become a standard part of perception system design. If CES 2026 helped establish that direction, the work that follows is about making it routine rather than exceptional.