Apera AI Leaders on Accelerating Vision-Guided Robotics with Vue 9.52 and 4× Faster AI Training

13 February 2026 | Interaction

Sina Afrooze and Jamie Westell discuss how Apera Vue 9.52, Programmable Autopilot, and 4D Vision are transforming deployment speed, reliability, and factory automation at scale.

In this exclusive interview with Robotics Business News, Sina Afrooze, Chief Executive Officer and Co-founder, and Jamie Westell, Director of Engineering, of Apera AI explain how the latest release, Apera Vue 9.52, and accelerated AI training in Apera Forge are reducing deployment friction, improving the predictability of robot motion, and enabling more reliable automation in highly variable manufacturing environments. The discussion also explores the future of 4D Vision, Physical AI, and the shift from rigid programming to intelligent, perception-driven robotics.

What key customer challenges were you targeting with the release of Apera Vue 9.52 and the faster Apera Forge AI training?

Across deployments, we consistently saw two challenges slow teams down. The first is the time required to get a cell from installation to stable production. Even when AI vision performance is strong, friction in setup, robot accuracy issues, and unexpected collisions from things like part slippage can stretch commissioning timelines. Apera Vue 9.52 delivers a step-change improvement in the setup experience: it highlights problems in the accuracy stack so teams can reach reliable picks faster, and it gives users the tools to fine-tune robot path planning for their specific application.

The second challenge is iteration speed. Real production environments always surface edge cases, whether that is new SKUs, a new bin presentation, or part variation. Faster AI training in Apera Forge shortens the loop between identifying a need, training a new or improved asset, and redeploying it. With this release, Forge training can complete in as little as 6 hours, and in under 24 hours for the vast majority of training runs, which changes how quickly integrators can prove out an application and converge on stable performance.

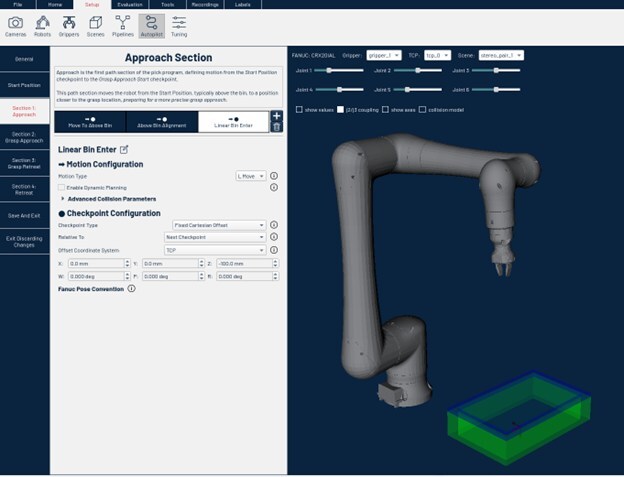

How does the new Programmable Autopilot feature improve robot motion planning and safety compared to previous releases?

Programmable Autopilot represents a move away from one-size-fits-most behavior toward explicit, reviewable intent. Out of the box, Autopilot optimizes paths for the fastest cycle time, which is convenient for new users and supports the fastest possible deployments. With Apera Vue 9.52, users can now modify the robot path optimization objectives to match the physical realities of their application.

Practically, that means users can insert motion segments, with linear movement as an option, and define motion relative to the bin or parts so the robot clears bin walls and avoids obstacles more deliberately. The safety benefit is predictability: motion is more constrained by design, exception handling is predictable, and the system behaves consistently across shifts and sites, which matters most when the objective is long-term uptime without performance surprises.
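To make the idea of bin-relative motion segments concrete, here is a minimal Python sketch. It is not Apera's Programmable Autopilot API: `BinPose`, `bin_to_base`, and `pick_segments` are hypothetical names, and the sketch assumes the bin sits flat so only its rotation about the vertical axis (yaw) matters. Waypoints are defined in the bin's own frame, so a clearance height above the bin walls stays correct wherever the bin is placed.

```python
import math
from dataclasses import dataclass


@dataclass
class BinPose:
    """Hypothetical bin pose in the robot base frame (flat bin, rotated only about Z)."""
    x: float
    y: float
    z: float    # height of the bin floor in the base frame
    yaw: float  # rotation about Z, in radians


def bin_to_base(pose, p):
    """Transform a point (x, y, z) from the bin frame into the robot base frame."""
    bx, by, bz = p
    c, s = math.cos(pose.yaw), math.sin(pose.yaw)
    return (pose.x + c * bx - s * by,
            pose.y + s * bx + c * by,
            pose.z + bz)


def pick_segments(pose, pick_in_bin, wall_height, clearance=0.05):
    """Return (label, motion_type, point) waypoints for one pick.

    The approach point sits directly above the pick at wall_height + clearance
    in the bin frame, so free-space motion only happens above the wall tops and
    the move into and out of the bin is a straight vertical (linear) segment.
    """
    px, py, pz = pick_in_bin
    safe_z = wall_height + clearance
    return [
        ("approach", "free", bin_to_base(pose, (px, py, safe_z))),
        ("descend", "linear", bin_to_base(pose, (px, py, pz))),
        ("retreat", "linear", bin_to_base(pose, (px, py, safe_z))),
    ]
```

For example, `pick_segments(BinPose(1.0, 0.0, 0.2, math.pi / 2), (0.1, 0.0, 0.02), wall_height=0.3)` yields an approach point about 0.55 m above the base and a linear descent to the pick. Expressing the segments relative to the bin, rather than as fixed base-frame coordinates, is what keeps the clearance valid when the bin shifts.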

In what ways do the new features in Vue 9.52 help reduce deployment time and integration complexity on the factory floor?

Most deployment delays come from repeated commissioning loops, where teams adjust something, test, learn what is still off, and repeat. Apera Vue 9.52 makes the system faster to deploy, easier to diagnose when something is not right, and more precise in describing accuracy issues.

On the setup side, Vue adds an Auto-Configure Cameras flow that simplifies camera networking, and calibration now auto-detects the pattern board when an Apera model is used. On the integration side, Programmable Autopilot reduces the amount of bespoke robot programming needed to achieve safe, predictable motion in real cells. Together, these improvements reduce on-site iteration and help integrators standardize deployments rather than reinventing a new approach for every line.

Apera Forge now delivers up to 4× faster AI training. What engineering innovations made this possible?

With the latest release of Apera Forge, asset training is up to 4× faster, completing in as little as six hours. Forge trains an AI neural network on synthetic parts through a million digital cycles to achieve >99.9% reliability in recognizing objects and performing tasks, delivering a complete vision program ready to deploy on the plant floor in six to 24 hours. It has never been faster for users to deploy 4D Vision across their plants.

The landscape of AI technology is moving at breakneck speed, driven largely by the success of LLMs following the introduction of ChatGPT. By building on many recent software and hardware advances in AI, we were able to deliver a step change in the training experience for our users without compromising quality, which is how we achieve up to 4× faster training in practice.

From the user’s perspective, the key outcome is faster training and faster iteration. Teams can evaluate new approaches, parts, bins, or variations much more quickly, which is an integrator’s dream. It changes the rhythm of a project from “wait and see” to “iterate and converge,” and that directly reduces deployment risk.

Can you explain how deeper diagnostic insights like robot mechanical error histograms impact long-term reliability and maintenance planning?

As vision-guided automation scales, how easily the solution can be maintained becomes an important source of long-term value for customers. Diagnostic tools like the robot mechanical error histogram make subtle performance regressions visible and measurable, allowing maintenance engineers to see the impact of mechanical wear or drift over time.

When running hand-eye calibration, Apera Vue can now show a histogram of the robot's intrinsic mechanical error. That helps teams distinguish calibration error from robot mechanical error, and it can indicate when a robot likely needs re-mastering. Over time, this kind of signal supports more proactive maintenance, faster troubleshooting, and less time spent on ongoing performance tuning.
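As a rough illustration of how an error histogram can feed a maintenance decision, the Python sketch below bins per-pose residuals from a calibration run and applies a percentile threshold. This is not Apera Vue's implementation: the function names, the 0.5 mm bin width, and the 2 mm re-mastering limit are hypothetical values chosen for the example, and a real system would separate mechanical error from calibration error more carefully than a single residual does.

```python
import math
from collections import Counter


def residual_mm(observed, predicted):
    """Euclidean distance (mm) between where the camera saw the target and
    where the robot's kinematic model predicted it would be."""
    return math.dist(observed, predicted)


def histogram(values, bin_width=0.5):
    """Count values into fixed-width bins; keys are the lower bin edges."""
    counts = Counter(math.floor(v / bin_width) * bin_width for v in values)
    return dict(sorted(counts.items()))


def percentile(values, q):
    """Nearest-rank percentile, with q in (0, 100]."""
    ordered = sorted(values)
    rank = max(1, math.ceil(q / 100 * len(ordered)))
    return ordered[rank - 1]


def needs_remastering(residuals, limit_mm=2.0, q=95):
    """Flag the robot when the q-th percentile residual exceeds limit_mm.
    The default limit is an illustrative assumption, not a vendor spec."""
    return percentile(residuals, q) > limit_mm
```

Using a high percentile rather than the maximum keeps a single noisy calibration pose from triggering a re-mastering flag, while a tail that grows run after run still stands out as the kind of wear or drift described above.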

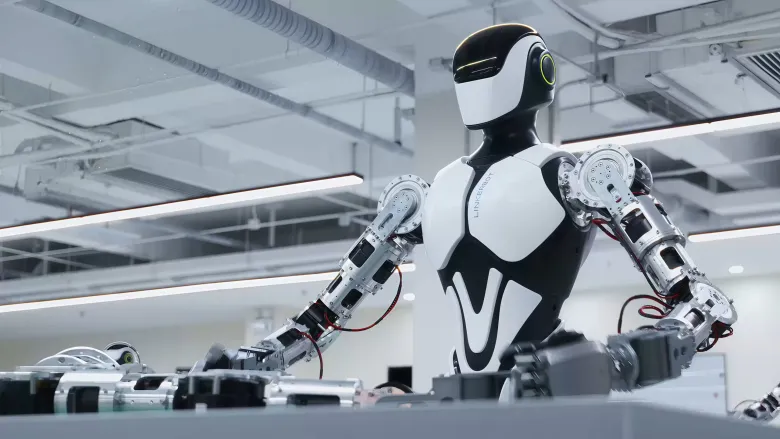

How does 4D Vision technology empower robots to perform more reliably in variable production environments like shifting racks or unstructured bins?

Factory environments are full of variability. Lighting changes, parts look different from batch to batch, surfaces wear, bins shift, and rack/bin presentation can vary even when the upstream process is “the same.” 4D Vision is built to be resilient to that reality by combining strong 3D perception and artificial intelligence with additional visual context so the system does not depend on a narrowly defined, perfectly repeatable scene.

The practical benefit is robustness. Robots can tolerate differences in lighting, part appearance, orientation, and presentation without constant re-tuning. Customers see more consistent performance across longer production runs and fewer cases where reliability slips simply because the environment does not look exactly like it did during commissioning.

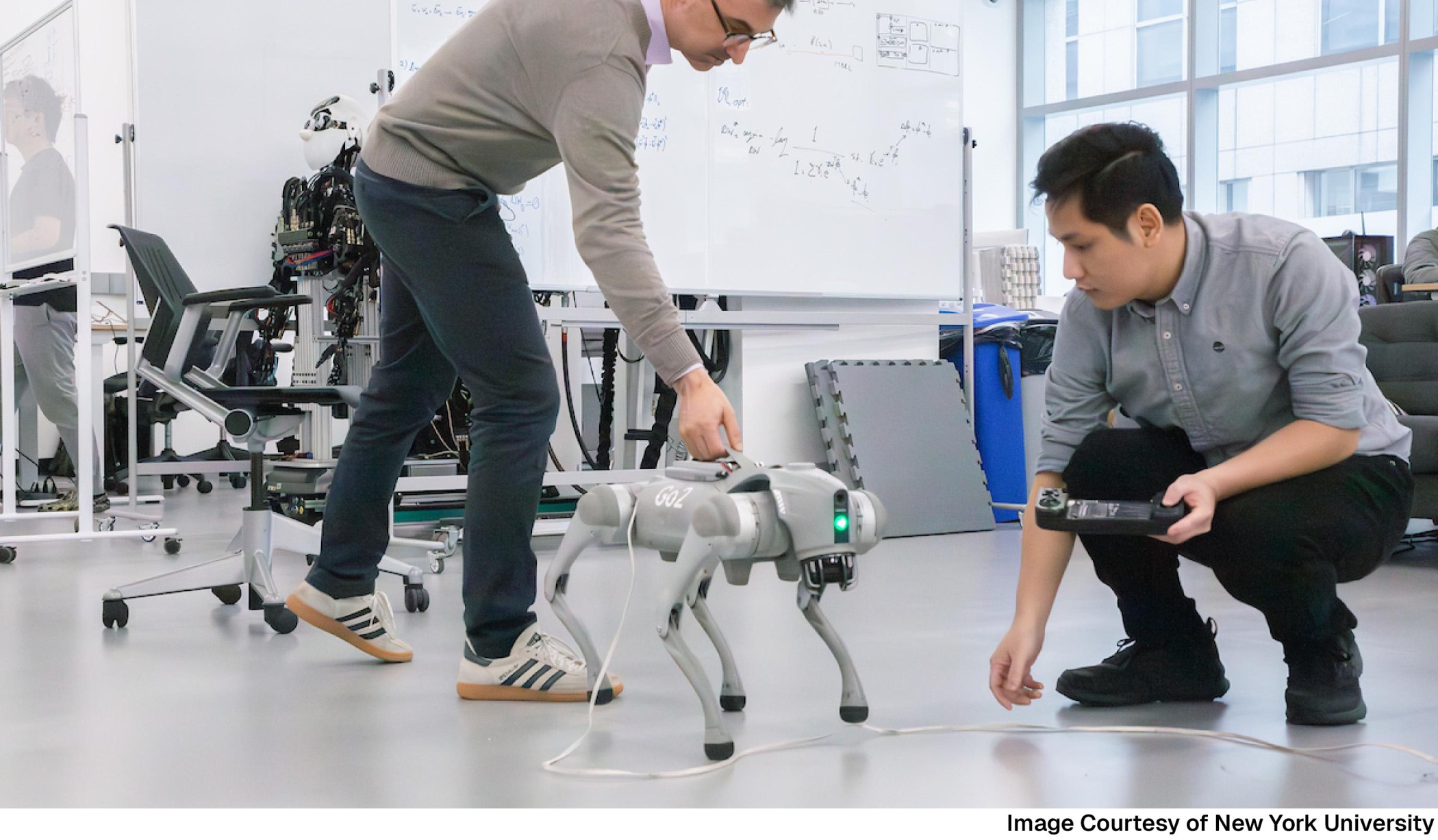

Which industries or use cases are seeing the strongest adoption of 4D Vision-guided automation today?

We see the strongest pull in industries where variability is unavoidable and the value of flexibility is high. Automotive and automotive suppliers are a major example, especially in applications like bin picking, de-racking, kitting, and handling stamped parts where presentation, lighting, and surface appearance can vary. Today, we serve North America’s top 6 automotive OEMs and their Tier 1 suppliers. Metals, plastics, and general manufacturing also show strong adoption.

The use of AMRs in manufacturing has risen, partly thanks to 4D Vision enabling solutions to adapt to variability in pallet and bin placement.

Across these segments, the common thread is a desire to automate tasks that used to be considered “too variable” for robotics without resorting to expensive fixturing. 4D Vision-guided automation is most compelling where customers want to reduce custom mechanical design and still maintain predictable throughput and uptime.

Looking ahead three to five years, how do you see vision-guided robotics transforming manufacturing and automation at scale?

Over the next three to five years, we expect manufacturing robots to shift from rigid, pre-scripted programs to vision-guided automation powered by Physical AI. Instead of relying on brittle, hand-tuned sequences, robots will increasingly reason about what they see and decide how to move in real time, handling variability, avoiding corner cases, and recovering gracefully when the environment changes. The result is higher reliability with far less intervention, less downtime, and a step-change in what can be automated across sites.

In parallel, the way automation gets designed and deployed will be transformed. AI will compress the concept-to-production cycle by generating and evaluating cell designs, process flows, and motion strategies orders of magnitude faster than today’s engineering-heavy approach. As the vision and motion stack becomes more capable by default, traditional robot programming will largely give way to AI-driven behavior, where every motion decision is informed by perception, context, and learned best practices rather than manual teaching and endless tuning.